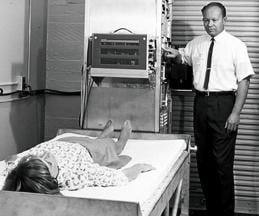

Godfrey Hounsfield created the prototype for the CT scanner.

Physicians at Northwestern Memorial Hospital in Chicago were as stunned in 1979 by Godfrey Hounsfield’s exclamation as Hounsfield was at the computed tomography (CT) image. “My word, what is that?” asked the inventor of computed tomography, who later that year would receive the Nobel Prize in physiology or medicine for his invention.

Hounsfield may have been the only one in the room who didn’t recognize the brain hematoma, wrote Lee F. Rogers, M.D., in his recollection of that day in 1979.1 But, in looking back, that should not have been a surprise. “The inventor of an imaging technology does just that, invents,” Rogers wrote. “It is usually left for others to determine how and when this technology is best used.”

In the early 1980s, early adopters of magnetic resonance imaging (MRI) took a collective leap of faith that its life-like images would reveal pathologies. It was a good but expensive gamble. The first machines cost millions of dollars each. It took years of experience to demonstrate their clinical value.

About the same time, ultrasound was “good enough” for OB/GYN but not much else. Engineers had the know-how to produce high-performance scanners, but vendors believed customers would be too stingy to pony up the necessary money. They were wrong. An engineer envisioned a better future for ultrasound. But clinicians took the chance and, eventually, proved the images were worth the price.

Diagnosticians were at the center of these early developments. But long before nuclear medicine was an imaging tool, it debuted with an “atomic cocktail.” First mixed in 1946 from radioactive iodine, this cocktail cured a patient with thyroid cancer. Within a few years, clinicians discovered they could visualize the thyroid and other organs using atomic concoctions.

The eclectic story of medical imaging began, of course, much earlier, when Wilhelm Röntgen discovered the X-ray. The first to see “radiographs,” however, were not physicians. Röntgen, a physicist, introduced them in January 1896 at the 50-year anniversary meeting of the Society of Physics. Their clinical potential was recognized a few weeks later with the publication in a medical journal of a radiograph showing a glass splinter lodged in the finger of a 4-year-old. The commercialization and mass production of X-ray tubes spread this technology around the globe, and within a few years radiography was recognized as a great medical advance.

While this history, certainly, is about technology, it is also about the people and how they turned ideas into medical tools. They include giants: some known, some not; some modest, some brash. Among them are four musicians, one of whom in 1966 described himself and other band members as “more popular than Jesus.”

CT: From Beatlemania to Manic Slice Wars

Electric and Musical Industries (EMI) may have owned the most famous recording studios in the world. Its Abbey Road Studios were the site of such Beatles hits as “Love Me Do,” “Can’t Buy Me Love” and “Revolution.”

Signed in 1962, the Beatles generated enormous profits for EMI, profits that some speculate gave EMI the flexibility to allow Godfrey Hounsfield the freedom to invent CT.

A dearth of financial data about EMI budgets, amid estimates that the British government may have contributed substantially more than EMI, has raised questions about how much credit is actually due to the Beatles for one of the greatest advances in medical imaging.2

One thing is for sure, however. Hounsfield did not invent CT on his own.

The British engineer built on decades of mathematical advances, notably the 1937 work of Polish mathematician Stefan Kaczmarz, who formulated the basis for a reconstruction method called “Algebraic Reconstruction Technique,” which Hounsfield adapted for use in CT.
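To give a feel for the kind of iterative reconstruction Kaczmarz’s work made possible, here is a minimal sketch of his row-projection method applied to a toy 2-by-2 “image.” It is an illustration under stated assumptions, not Hounsfield’s actual implementation: the function name `kaczmarz`, the five hypothetical rays and the pixel values are all invented for the example.

```python
def kaczmarz(rays, measurements, sweeps=200):
    """Estimate pixel values x such that each ray's weighted sum matches its measurement.

    Each pass projects the current estimate onto the hyperplane defined by one ray
    equation, cycling through the rays in order (Kaczmarz's 1937 scheme).
    """
    x = [0.0] * len(rays[0])
    for _ in range(sweeps):
        for ray, measured in zip(rays, measurements):
            norm_sq = sum(a * a for a in ray)
            if norm_sq == 0.0:
                continue  # a ray that crosses no pixels carries no information
            predicted = sum(a * xi for a, xi in zip(ray, x))
            step = (measured - predicted) / norm_sq
            x = [xi + step * a for xi, a in zip(x, ray)]
    return x


# Hypothetical 2x2 image, pixels ordered [top-left, top-right, bottom-left, bottom-right].
# Each ray lists which pixels it passes through (1) or misses (0).
rays = [
    [1, 1, 0, 0],  # across the top row
    [0, 0, 1, 1],  # across the bottom row
    [1, 0, 1, 0],  # down the left column
    [0, 1, 0, 1],  # down the right column
    [1, 0, 0, 1],  # along one diagonal
]
true_image = [1.0, 2.0, 3.0, 4.0]

# Simulated measurements: the sum of pixel values along each ray.
ray_sums = [sum(a * p for a, p in zip(ray, true_image)) for ray in rays]

estimate = kaczmarz(rays, ray_sums)
print([round(value, 3) for value in estimate])
```

The row and column rays alone leave one ambiguity in this toy image; the diagonal ray removes it, which hints at why a scanner needs measurements from many angles.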

Independently, while at Tufts University in Boston, South African-born Allan McLeod Cormack developed a method for CT. So significant were his efforts that the Nobel committee awarded the 1979 Nobel Prize in medicine jointly to Cormack and Hounsfield.

Then there was the clinical connection. CT could not have transitioned to mainstream medical practice without the involvement of James Ambrose, M.D., a consultant radiologist at Atkinson Morley Hospital in London. Ambrose joined Hounsfield in 1971. Together they used CT prototypes built under Hounsfield’s direction to look at animal and preserved human organs. In late 1971, the first clinical CT was installed at Atkinson Morley Hospital.

This machine, the EMI Mark 1, could scan only the brain. It was displayed in November 1972 at the Radiological Society of North America’s (RSNA) annual meeting. At this meeting, Ambrose delivered the first clinical results from the use of a CT scanner.

Soon after, Robert S. Ledley, DDS, a dentist-turned-biomedical researcher at Georgetown University, invented a whole-body CT. By the late 1970s the modality was ubiquitous in the medical practices of developed nations around the world.

With the fundamentals of CT in place, the modality evolved, as corporate engineering teams chipped away at problems unique to this modality. One was an unexpectedly high failure rate of gantry motors onboard CTs built and installed by GE Medical Systems. The problem was initially thought to come from too little lubrication. But adding more lubricant had no effect. It was then learned that the grease lubricating the motor shaft turned to glue after long exposures to X-rays. Changing the lubricant solved the problem.

As scans covered more of the patient, X-ray tubes overheated. Philips solved this problem in the early 1990s by repurposing its Maximus Rotalix Ceramic (MRC) tube, which had been developed for cardiology and vascular X-ray systems. With no ball bearings to create friction and the use of a liquid-metal alloy as a lubricant, tube cooling was tripled and the scan time of Philips’ CTs extended.

Periodically the industry leapt forward. In a radical departure from the status quo, Douglas Boyd, Ph.D., pioneered the use of electron beam tomography in the mid-1980s.

Electron beam tomography (EBT) scanners used a magnetically controlled electron beam to fire a thin circle of X-rays. Unfettered by the mechanical limitations of a conventional CT gantry, EBT systems delivered motion-free images of the heart and surrounding blood vessels. Their stratospheric price tag, north of $2 million, however, limited worldwide sales to about 150 scanners until Imatron, the company that commercialized EBT, was sold to GE Medical in 2001.

In contrast, the advent of helical scanning had a profound impact on the modality. This leap, taken in 1989, made “step-and-shoot” CT scanning all but obsolete. The step-and-shoot gantry fired X-rays for a single rotation, then waited for the table to “step” the patient to the next position. Helical CT gantries rotated continuously, as the table propelled the patient through the gantry, slicing the patient into one continuous spiral. Subsecond rotations and thinner slices led to shorter scans and higher resolution images with fewer artifacts.

This advance, pioneered by Siemens’ R&D director, Willi A. Kalender, Ph.D., was adopted first by the German multimodality vendor. GE soon followed suit, as did other makers of CT.

The next revolution — multislice scanning — took the CT community by storm in late 1998, when Toshiba, Siemens and GE launched proprietary versions within weeks of each other. The appearance of these machines triggered nearly a decade of heated competition among vendors to produce scanners with more and more slices per rotation. Quad-slice scanners gave way to ones that generated eight slices. In time, these were eclipsed by ones with 16 and then 32. In the mid-2000s, the industry achieved a milestone — commercial CT scanners that delivered 64 slices per rotation, marking the height of the industry’s slice wars, as these scanners for the first time allowed routine cardiology applications.

The quad-slice scanners of Toshiba, GE and Siemens are widely recognized as having kicked off the modern age of CT imaging. Yet, their release was preceded seven years earlier by the CT-Twin, a dual-slice scanner from Elscint. The Israeli firm invented multislice scanning. But Philips has since usurped its claim; a muddled corporate history of mergers and acquisitions is the reason.

Stricken by poor sales, Elbit Medical Imaging, the parent of Elscint, began selling its imaging assets in the late 1990s. One of those, Elscint’s CT business, was sold to Picker International. In 1999, Picker renamed itself Marconi Medical Systems, which two years later was sold to Philips, thereby laying the basis for Philips’ claim to have invented multislice scanning.

Applying similar logic, GE could claim to have invented CT. In 1980, the company bought EMI, which had merged previously with another company to become Thorn EMI. Thus far, GE has shown no inclination, however, to make such an assertion.

MRI: Great Aspirations, Less Than Humble Beginnings

In many ways, Raymond Damadian, M.D., has been larger than life. When his MRI company Fonar was still exhibiting at the RSNA meeting, placards atop its booth attested to that. They were composed of personal photos, including one of Damadian in 1988 accepting the National Medal of Technology and Innovation from President Ronald Reagan. The company founder was impossible to ignore, as GE found out, much to the chagrin of its executives and shareholders. In 1997 GE paid Fonar $128.7 million in damages and interest after a jury concluded that GE had infringed MRI patents held by Fonar.

No one, therefore, should have been surprised when Damadian launched a very public campaign against the Nobel Committee when he was not among those honored for developing MRI with the Nobel Prize in physiology or medicine in 2003. Physicists Paul Lauterbur of the University of Illinois at Urbana-Champaign, and Peter Mansfield of the University of Nottingham, won.

Ads in the Washington Post and other newspapers featured pictures of the Nobel medallion upside down and text arguing the Nobel committee had gotten it wrong. Damadian was just as forthright in interviews: “If I had never been born, there would never be MRI today,” he told Nature.3

How the committee made its decision will not be known publicly for a long time. Deliberations for Nobel Prizes are sealed for 50 years. A proponent of Damadian has opined, however, that “possible purported reasons for his (Damadian’s) rejection have included the fact that he was a physician, not an academic scientist, his intensive lobbying for the prize, his supposedly abrasive personality and his active support of creationism.”4

What is not speculative is that Damadian was among the first to propose the use of nuclear magnetic resonance (NMR) to produce medical images. A 1971 paper he wrote in Science documented that cancerous and healthy tissue can be differentiated in laboratory rats using NMR.5 Damadian later patented a technique to scan the body for tumors by taking a series of NMR readings from different spots. The company he founded was the first to win U.S. Food and Drug Administration (FDA) approval for an MR scanner to be sold in the United States.

Conversely, proponents of Lauterbur and Mansfield say the two men made contributions that were essential to clinical MRI. In March 1973, Lauterbur published a paper in Nature titled “Image Formation by Induced Local Interactions: Examples Employing Nuclear Magnetic Resonance.” He described this technique, which he called zeugmatography (from the Greek zeugmo meaning joining), as the joining of a weak gradient magnetic field with a stronger main magnetic field. This allowed the spatial localization of two test tubes of water, depicted in an image generated using a back projection method. MRI experts say this experiment moved NMR from one dimension to two, thereby providing the foundation for clinical MRI.

Mansfield expanded upon Lauterbur’s work, developing a way to use magnetic gradients to precisely identify differences in the resonance signals. Mansfield’s work led directly to “echo-planar” imaging, the mainstay of clinical MRI, which turned image processing from an hour-long task to one that could be completed in less than a second.

Dozens of companies flooded the MRI space in the late 1970s and 1980s. Among them was EMI, which collaborated with Mansfield. The British giant abandoned those efforts in the early 1980s, however, selling the assets to Picker International.

Philips began exploring MRI with a pilot project launched in the mid-1970s, building a prototype that generated human images in 1978. Ironically the company was among the last to enter the U.S. marketplace, gaining FDA approval for a 1.5T system in 1986.

Damadian’s Fonar was the first to market in the United States followed by Technicare (a subsidiary of Johnson & Johnson) and Picker. GE weighed in soon after, quickly becoming this country’s major supplier of commercial MRI systems.

Although GE is widely known for its 1.5T Signa, which in the 1980s set the benchmark for image quality, the company experimented with a dizzying variety of field strengths, including 0.12T, 0.3T, 0.5T, 1T, 1.3T, 1.4T and 1.5T.

Various companies produced scanners at different field strengths. Bruker specialized in ones used for research, focusing on field strengths at 2.0T and above. Instrumentarium specialized in ultra-low field scanners, seeking a moderately priced alternative to high-cost MRI scanners. Diasonics produced ultra-low, low and mid-field systems that were “open” rather than cylindrical, as was typical.

In the 1990s, open mid-field systems were popular not only for their lower cost, but because they addressed patient complaints about claustrophobia. Hitachi rose to prominence with its successful marketing in the United States of Airis, a family of open scanners that operated at 0.3T. By the end of the decade, every major MRI vendor offered at least one open design.

Today, demand for open mid-field systems has all but evaporated. Two manufacturers — Philips and Hitachi — continue to make a high-field open product, Philips Panorama at 1.0T and Hitachi’s Oasis at 1.2T. Quenching demand for open systems has been short- and wide-bore cylindrical products.

The new high-field standard is 3.0T; the workhorse is 1.5T. The latest major development in MRI is its hybridization with positron emission tomography (PET), a modality that more than a decade earlier combined with CT.

Molecular Imaging: Splitting the Atom, Combining Modalities

In the Atomic Age that followed World War II, peaceful uses for splitting the atom seemed reasonable. Nuclear energy was supposed to be the source of unlimited achievement in the post-war era, fueling not only terrestrial power plants but interstellar spacecraft, airplanes and even cars. Atomic bombs would clear ground for new roads and frack open natural gas reserves. Lost golf balls would be a thing of the past, as radioactive shells would reveal their presence no matter how thick the rough.

It was in this context of unfettered optimism that the American public first learned in 1946 that an “atomic cocktail” had cured thyroid cancer. The thyroid had absorbed radioactive iodine that killed the cancer.

Later in the 1950s, this weapon of “mass” destruction would be used in low doses to measure the function of the thyroid and identify disease in this gland. In the decades ahead, other radioactive elements would be used to trace metabolic processes.

Nuclear medicine blossomed in the 1960s, uncovering cancer hot spots in the lungs. By the next decade it was visualizing hot spots throughout the body — in the liver and spleen, brain and GI tract. In 1971, the American Medical Association formally recognized nuclear medicine as a specialty. Its radiotracers were used commonly to evaluate heart function, blood clots in the lungs, bone pain, infection, liver, kidney and bladder function, even orthopedic injury.

Nuclear medicine is typically seen as a development of the Atomic Age, but its beginnings go back much further to the discovery of radioactivity by Antoine Henri Becquerel, Marie Curie and her husband, Pierre. All three shared the Nobel Prize in physics in 1903 for their work.

In 1935, Jean Frédéric Joliot-Curie and Irène Joliot-Curie shared the Nobel Prize in chemistry for their synthesis of new radioactive elements. The radioactive iodine they synthesized for the atomic cocktail of 1946 was later used to image the thyroid gland, quantify thyroid function and treat patients for hyperthyroidism.

Following this was the discovery in 1937 of technetium-99m by Carlo Perrier and Emilio Segre. This element, found in a sample of molybdenum bombarded by deuterons, was the first element artificially produced. (Its origin explains its name rooted in the Greek technetos, meaning artificial.)

The molybdenum generator was developed about 25 years later for the supply of technetium-99m. This generator was critically important to the widespread use of technetium, as the element’s six-hour half-life made long-term storage impossible.
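The storage problem follows directly from the arithmetic of exponential decay. As a rough sketch, assuming only the six-hour half-life cited above (the function name `fraction_remaining` is made up for this example), the share of technetium-99m activity left after a given delay can be computed as follows:

```python
def fraction_remaining(hours_elapsed, half_life_hours=6.0):
    """Fraction of the original activity left after hours_elapsed.

    Activity halves once per half-life, so the fraction is 0.5 raised to
    the number of half-lives that have passed.
    """
    return 0.5 ** (hours_elapsed / half_life_hours)


for hours in (6, 12, 24, 48):
    print(f"after {hours:>2} h: {fraction_remaining(hours):.4%} of the activity remains")
```

After a single day, four half-lives have passed and only about 6 percent of the activity is left, which is why an on-site generator that produces the isotope on demand mattered so much.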

Just as the range of radionuclides has come a long way, so have the technologies used to record them. In the beginning, scans were performed using a Geiger counter positioned near the organ of interest.

The first images were produced in 1950 using a rectilinear scanner, developed by Benedict Cassen, who became known as the father of body organ imaging. His first automated scanning device — a motorized, scintillation detector coupled to a printer — generated images of radioiodine absorbed by the thyroid gland. This type of scanner was used until the early 1970s with various radiopharmaceuticals to visualize organs throughout the body.

Later in the 1950s, Hal O. Anger followed with the development of a scintillation camera that allowed dynamic imaging of human organs. The Anger camera, as it came to be known, was first displayed at the Society of Nuclear Medicine annual meeting in 1958, yet was not commercially produced until the early 1960s by Nuclear Chicago Corp. of Des Plaines, Ill. Siemens refined the Anger camera after acquiring Searle Analytic in 1979, which nine years earlier had purchased Nuclear Chicago.

These early cameras delivered planar images. Today, single-photon emission computed tomography (SPECT) offers advantages in contrast, spatial localization and overall detection of abnormal function. The concept underlying SPECT goes back to the work of David E. Kuhl and Roy Edwards who, in the late 1950s, began taking cross-sectional images of radioisotopes in the body. Kuhl is credited with the development of SPECT, producing the first tomographic images of the human body in the mid-1970s. His work cleared the way for positron emission tomography (PET).

The development by Michael E. Phelps in 1973 of the first PET system and the synthesis several years later of 18F fluorodeoxyglucose (18F-FDG), in turn, laid the groundwork for modern PET in oncology.

Because cancer cells metabolize glucose at 10 times the rate of normal cells, malignant tumors appear as bright spots on PET scans. Similarly the commercial development of rubidium-82 in the late 1980s made myocardial perfusion imaging possible.

PET remained an elite tool throughout the 1990s. Its clinical use was hamstrung by the need for a cyclotron to produce positron emitters; the expense of acquiring and operating the cyclotron; the high cost of rubidium versus the relatively low cost of cardiac SPECT; and the capital expense of the PET scanner itself. Most limiting, however, was the lack of localization with PET.

The latter problem was solved in 1998 with the hybridization of PET with CT by David W. Townsend, Ph.D., at the University of Pittsburgh and Ron Nutt, Ph.D., then president of CTI PET Systems. This flung wide open the floodgates for metabolic/anatomic imaging of cancer patients in the early 2000s. The first prototype PET/CT scanner, designed and built by CTI PET Systems in Knoxville, Tenn. (now Siemens Molecular Imaging), began operating in 1998.

Several factors ignited a manufacturing frenzy of PET/CTs a few years later. One was a regulatory mechanism for FDA approval of PET radiopharmaceuticals. This cleared the way for third-party coverage, led by Medicare. Individual sites and networks soon sprang up to supply cyclotron-produced FDG. In the wake of these developments, PET/CT quickly became the go-to modality for many types of oncologic diagnosis and follow-up.

The commercialization of SPECT/CT followed. Its adoption is only now beginning to gain momentum. Ironically this hybridization preceded PET/CT by several years. Bruce Hasegawa, Ph.D., directed the first such combination, building a prototype SPECT/CT in the early 1990s at the University of California, San Francisco.

In a further irony, nuclear medicine — once empowered by its connection to atomic energy — began laboring under this association following the nuclear calamities at Chernobyl and Three Mile Island. Molecular imaging is now the preferred moniker, as opinion leaders in this specialty seek a linkage between this term and “personalized medicine.”

Radiography: X-rays Unveil a Hidden World In and Out of Medicine

Wilhelm Röntgen discovered X-rays in late 1895. But he probably was not the first to produce them.

Back then the leading inventors of the late 19th century were experimenting on cathode vacuum tubes of the type Röntgen used when he made his earth-shattering discovery. Among them: Thomas Edison, Nikola Tesla, Heinrich Hertz and William Crookes.

In fact, Röntgen was working with a vacuum tube developed by Crookes when he noticed fluorescence occurring in a nearby barium platinocyanide screen and traced the radiation back to the tube.

Soon after, with his wife’s hand as a model, Röntgen demonstrated the potential of X-rays (short for “unknown” or “X” radiation) to reveal what lay beneath the skin. The medical utility of X-rays in orthopedics and surgery was soon demonstrated. Enabled by Röntgen’s refusal to patent the process for making X-rays, inventors and entrepreneurs swarmed the fledgling industry.

Months after Röntgen’s discovery, an Englishman, Sir Herbert Jackson, designed the first X-ray vacuum tube. American physicist Michael Pupin developed a fluorescent screen to shorten exposure time and improve the image. This would evolve eventually into the fluoroscope, just as Carl Schleussner’s use of a silver bromide-coated glass plate would lead to radiographic film.

Early X-ray tubes were succeeded by the hot cathode, high-vacuum X-ray tube. Invented in 1913 by William Coolidge, this tube would be named for its inventor. Along with a moveable grid, created by Chicago radiologist Hollis Potter, the Coolidge tube made radiography invaluable in World War I.

With X-rays, surgeons dispensed with the use of probes, as they preoperatively homed in on bullets, shrapnel and other foreign bodies embedded in soldiers. In orthopedics, X-rays helped in the diagnosis of fractures.

Not all radiographs, however, were easy to read. Sometimes tissues or objects obscured what was behind them. In 1916 a French dermatologist, Andre Bocage, drafted to serve in WWI, developed a method whereby X-ray exposures taken at different angles would overcome this shortcoming. His method, called tomography, would become a standard method in radiography and the basis for CT, as well as modern day breast tomosynthesis.

In breast tomo, the X-ray tube and paired detector are driven by a motor to a series of points along an arc. Ultra low-dose exposures at each point create images that are then processed and compiled into a tomogram. Further processing creates a synthetic digital mammogram. Together these two digital images boost diagnostic confidence and reduce patient recalls, while keeping radiation exposure of the patient at the same level as mammography alone.

Whereas contemporary users of ionizing radiation assiduously avoid exposure, the pioneers of radiography often exposed their hands to gauge the penetrating power of the tubes with which they worked. Radiation burns were common to operators and patients, as exposures often went on for extended periods.

One such case involved a head radiograph taken in July 1896, for which the patient was exposed to X-rays for 14 hours. Within days, the patient’s head was covered with sores; his lips were swollen, cracked and bleeding; his right ear had doubled in size; and hair on the right side of his head fell out.

Clarence Dally, a glassblower in Thomas Edison’s laboratory regularly exposed to X-rays as part of his work, had both arms amputated after developing skin cancer. He died in 1904 at age 39 of metastatic carcinoma. John Hall-Edwards is notable for having taken the first X-ray during surgery and for later losing his left arm to skin cancer. Wolfram C. Fuchs, who made the first X-ray film of a brain tumor in 1899, died of cancer in 1907.

Heinrich Ernst Albers-Schönberg documented in 1904 that exposure to X-rays could damage the reproductive glands of rabbits. He was one of the first to use radiation protection devices, as well as procedures and equipment for radiation/dose assessment.

Despite such warnings, radiographic devices were widely used, and not just for medical purposes. In the 1920s, X-ray machines could be found in beauty shops across the country for the removal of unwanted facial hair on women. These machines fired X-rays directly into the cheek and upper lip. Some 20 treatments were typically involved. In 1929, the American Medical Association warned of injuries being manifested as pigmentation, wrinkling, atrophy, keratoses, ulcerations, carcinoma and death.

In the 1940s and early 1950s, shoe salesmen flipped a switch and shoppers could see their toes wiggling on fluoroscopes. At their height, some 10,000 of these devices were in use at shoe stores across the United States. X-rays, emitted by a tube mounted near the floor, penetrated the shoes and feet, then struck a fluorescent screen on the other side. The image was reflected to three viewing ports at the top of a cabinet where the customer, salesman and a third person could see the results.

By 1970, the practice was heavily regulated or banned in all 50 states. Yet one system was discovered operating in 1981 at a West Virginia store. When informed that the practice was banned by state law, the store donated the machine to the FDA.

Despite risks and misuse, there was good reason to celebrate the arrival of radiography. Properly applied, X-rays allowed noninvasive and painless disease diagnosis and therapy monitoring. They made possible medical and surgical treatment planning, and provided real-time guidance for the insertion of catheters, stents and other devices inside the body, as well as treating patients with tumors.

The widespread adoption of digital X-ray technologies at the turn of the century improved image quality thanks largely to software enhancement. It also made radiography more efficient with the elimination of film processing and the storage of electronic images.

Today digital images are sent throughout the healthcare enterprise via picture archiving and communication systems (PACS). PACS first appeared at the 1984 RSNA meeting, but were more proof of concept than product. Workstations cost hundreds of thousands of dollars. Mass storage required hundreds of optical disks. A robotic jukebox, developed in the late 1980s and capable of finding and reading a terabyte of data, was priced at more than a million dollars.

In the early 1990s, a comprehensive PACS would have cost millions, yet would have been compatible with only a minority of medical images. The American Society of Radiologic Technologists estimates that radiography examinations today represent 74 percent of all radiologic examinations performed on adults and children in the United States.

Given the cost and logistical challenges, “mini” PACS were developed. Some managed MRI and CT images. Others were specialized for nuclear medicine or ultrasound. Digital X-ray systems undercut demand for such truncated systems by producing the fodder for full-blown PACS, just as the means for storage and data transfer were becoming economically viable.

Getting to this point took a long time, however. Early attempts at digital X-ray go back to the 1970s and the use of selenium-coated metal plates. This method, called xeroradiography, produced images of the breast, chest, temporomandibular joint, teeth and skull. But because dust or even moisture could degrade the image, its appeal was limited.

Various other technologies were tried. One digitized analog images using fiber optics that piped light flashes from a scintillator to charge-coupled devices (CCDs). These CCDs turned the flashes into electrical signals.

Another, called computed radiography (CR), used phosphor plates to record X-ray strikes. The plates were processed into images using laser light. CR systems were widely adopted in the early 2000s as a means for reducing costs related to film, as well as improving efficiency through the transmission and storage of images using PACS.

But radiography did not enter the modern digital age until the mass adoption of flat panel detectors. These were composed of either amorphous silicon or selenium. Silicon panels recorded light flashes produced when X-rays struck a scintillator. Selenium panels turned X-ray strikes directly into electrical signals.

In the mid- to late-1990s, flat panels were very expensive and their cost rose in proportion to their size. Consequently, they were first widely adopted when built into products that were relatively cost insensitive or required relatively small panels — or both. Cardiovascular X-ray systems were already high-priced; digital mammography units were new. Purchasers, therefore, were more accepting of the costs associated with flat panels. Additionally, the systems were stationary and, unlike radiography suites, required no shuffling of plates, which then were vulnerable to breakage.

Today, flat panels are available on all types of X-ray systems, even portables, which are jarred daily coming off hospital elevators and going through doorways. New flat panel designs have improved durability, as costs have come down with mass production and improved manufacturing.

Image processing has cut patient radiation exposure, while maintaining image quality. Continuing advances promise further cuts. This trend is in keeping with the ALARA principle, which calls on providers to administer doses of ionizing radiation that are “as low as reasonably achievable.”

It is a far cry from the early days of radiography.

Read the related article "The Early Years of X-Rays and Informatics."

References:

5. Damadian R. “Tumor Detection by Nuclear Magnetic Resonance.” Science 171, 1151–1153, 1971.

Greg Freiherr has reported on developments in radiology since 1983. He runs the consulting service, The Freiherr Group. Read more of his views on his blog at www.itnonline.com.

March 19, 2025
