Two Technologies That Offer a Paradigm Shift in Medicine at HIMSS 2017

A view through a HoloLens augmented reality visor showing an overlaid brain image co-registered with a live patient on a table at HIMSS 2017.

Information technology (IT) is among the least sexy areas to cover in medical technology advances, and it is often difficult to find really interesting news as I sift through more than 1,300 vendors at the massive annual Health Information and Management Systems Society (HIMSS) conference. However, at this year’s conference I found two exciting new technologies that I feel have the potential to become paradigm shifts in medicine. The first is the integration of artificial intelligence into medical imaging IT systems. The second, and the coolest tech at HIMSS, was the use of augmented reality 3-D imaging visors to create a heads-up display of 3-D anatomical reconstructions, or of complete computed tomography (CT) or magnetic resonance imaging (MRI) datasets, that surgeons can use in the operating room (OR).

The medical imaging applications of augmented reality were shown by two vendors at HIMSS, although dozens of booths used the same Microsoft HoloLens augmented reality visors for fun activities in an attempt to draw in attendees. TeraRecon debuted its cloud-based augmented reality solution, the HoloPack Portal, which extends TeraRecon's 3-D viewing of CT anatomical reconstructions to true 3-D images projected in the Microsoft HoloLens visor. The system uses voice commands and finger movements to enlarge, shrink or rotate the 3-D images so surgeons do not have to break the sterile field in the OR.

Novarad showed a similar work-in-progress system using the HoloLens that allowed attendees to see registered CT and MRI datasets overlaid on a live patient on a table. Using hand and finger gestures in the air in front of the visor, the user can go through the dataset slice by slice, or change the orientation of the slices. Novarad also showed a video of how the system would work in a real OR, where surgeons can see the underlying anatomical structures for real-time navigation without needing to look at a reference screen across the room or ask someone else to change the view to what they need.

After attending HIMSS, I actually feel energized about the prospects artificial intelligence (AI) may offer medicine. But, unlike the science fiction image that snaps into most people’s minds when you talk about AI, it will not be a cool, interactive, highly intelligent robot that will replace doctors. In fact, most users will not even be aware AI is assisting them in the backend of their electronic medical record (EMR) systems. AI is a topic that has been discussed for a few years now at all the medical conferences I attend, but at HIMSS I saw some of my first concrete examples of how AI (also called deep learning or machine learning) will help clinicians significantly save time and improve workflow efficiency. AI will accomplish this by working in the background as an overlay software system that sits on top of the PACS, specialty reporting systems and medical image archives at a hospital.

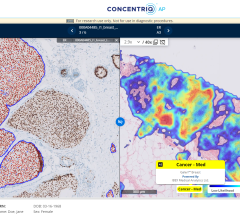

The AI algorithms are taught through machine learning to recognize complex patterns and relationships in the specific types of data that are relevant to the image or disease state being reviewed. In one example I saw from Agfa’s new integration of IBM Watson’s AI, the system was smart enough to look at a digital X-ray image and realize the patient had lung cancer and evidence of prior lung and heart surgeries. It automatically searched for the patient's relevant records from oncology treatments, cardiology, prior chest exams from various modalities and recent lab results, along with relevant patient information on their history of smoking.

Philips Healthcare showed its Illumeo adaptive intelligence software, which uses AI to speed workflow. The example demonstrated was for oncology, where a computed tomography (CT) exam showed several tumors. The user can hover and click on a specific piece of anatomy on a specific slice and orientation. The system then automatically pulls in prior CT scans of the same region and presents the images from each exam in the same slice and orientation as the current image. If the AI determines it is a tumor, the system also runs auto quantification of the tumor sizes from all the priors and presents them in a side-by-side comparison. The goal of the software is to greatly speed up workflow and assist doctors in their tasks.

AI is also making its appearance in business and clinical analytical software, as well as imaging modality software, where it can automatically identify all the organs and anatomy, orient the images into the standard reading views and perform auto quantification. This is already available on some systems, including echocardiography for automated ejection fractions and wall motion assessments.

Watch the VIDEO from HIMSS 2016 "Expanding Role for Artificial Intelligence in Medical Imaging."

Read the article from HIMSS 2017 "How Artificial Intelligence Will Change Medical Imaging."

August 29, 2024
